Let's connect to ChatGPT from PHP, and see what the possibilities really are. Below, we will dive right into the topic. We will try to keep everything as easy to understand as possible, and keep the details separate from the "just get it done" approach.

To start off, you will need an OpenAI account with a payment method attached to it. This is needed because the API is not free to use. It costs a little money: $0.002 per 1,000 tokens for gpt-3.5-turbo at the time of writing (more on this later).

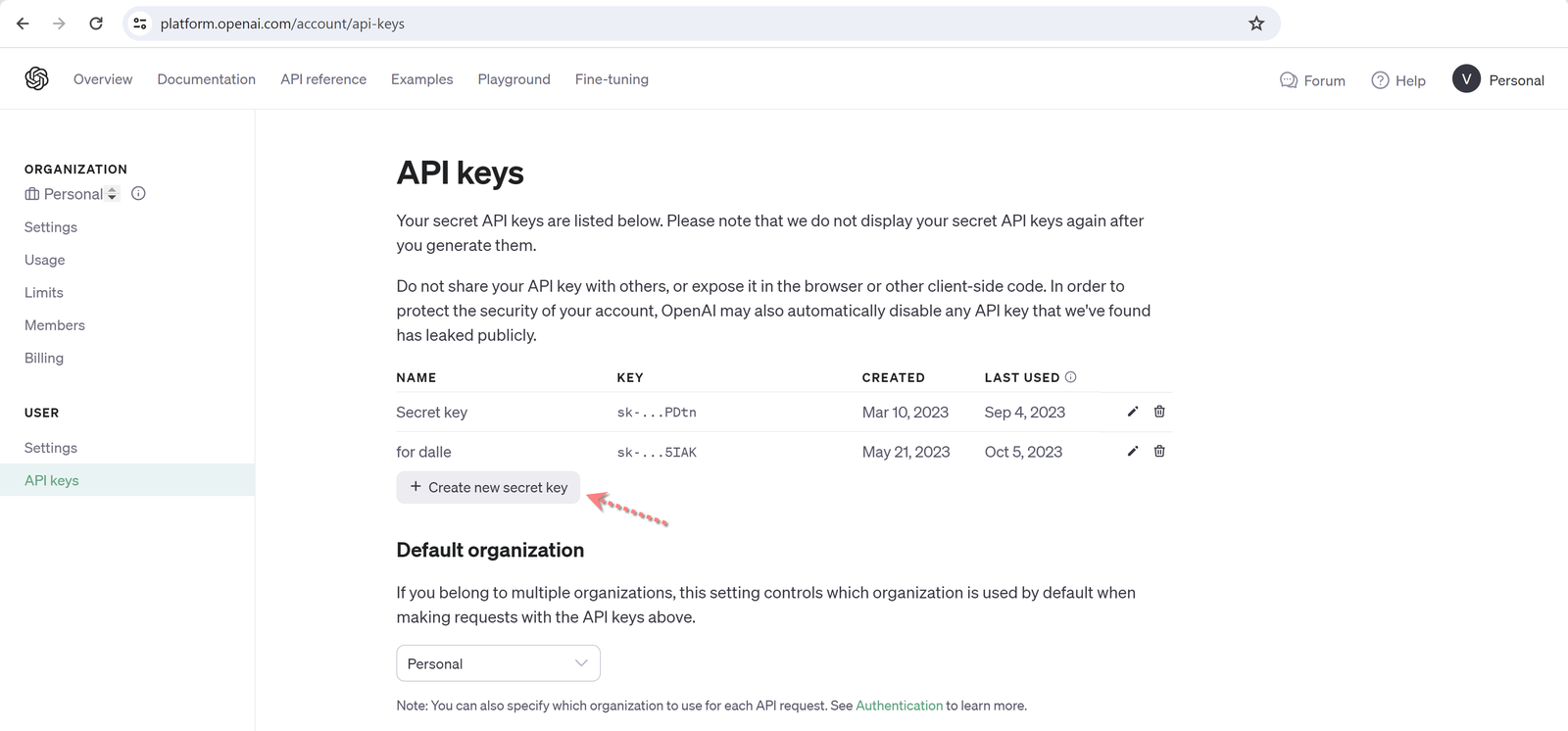

Get Your OpenAI API Key

Below are the steps to get your OpenAI API key, shown as screenshots. Keys are created on the API keys page of your OpenAI account (https://platform.openai.com/account/api-keys).

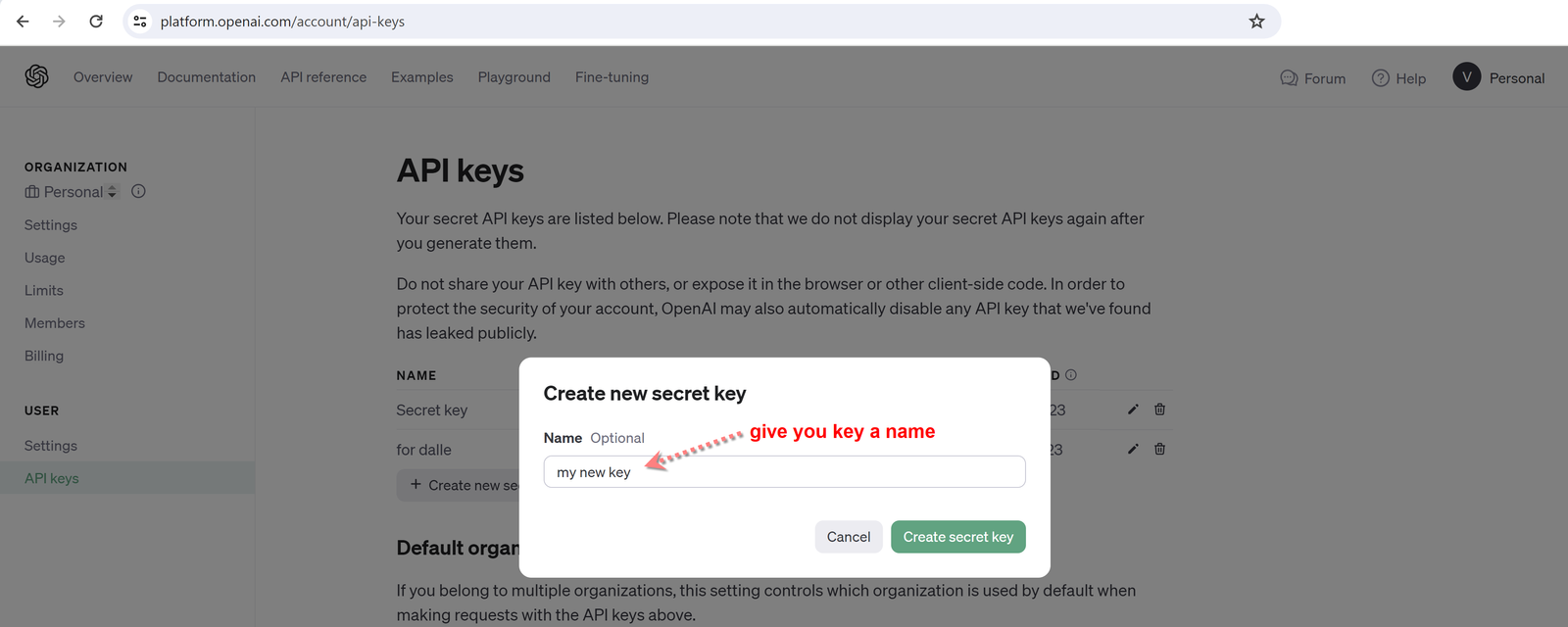

Click on "Create new secret key", and give your key a name. This can really be anything.

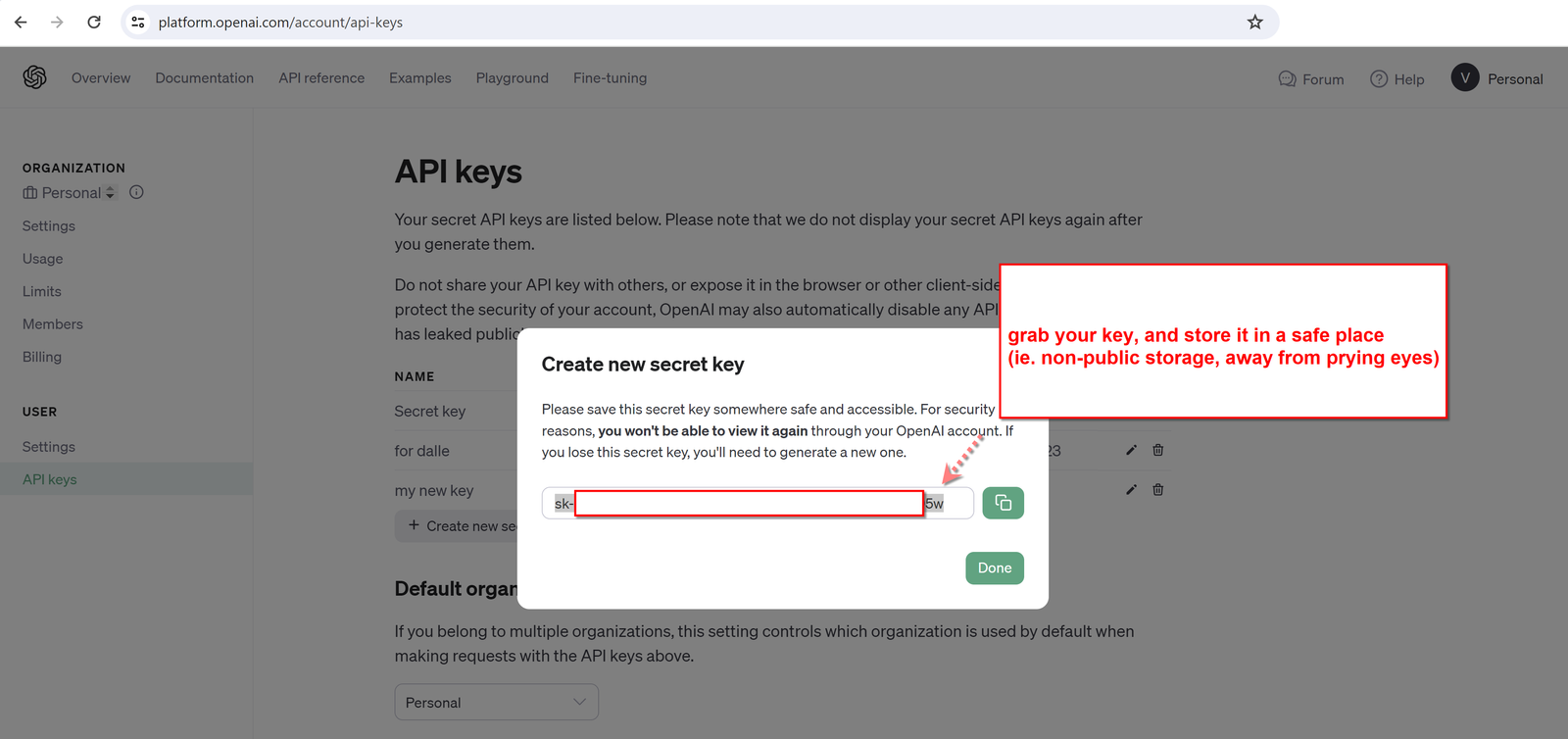

Now, copy your key and store it in a safe place. OpenAI shows the full key only once, so copy it right away. BTW, all OpenAI API keys start with "sk-", which stands for "secret key".
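Rather than pasting the key into your source code, you can keep it in an environment variable so it never ends up in version control. Below is a minimal sketch that assumes the variable is named OPENAI_API_KEY. That name is our choice, and any name works as long as your server sets it. The function name getOpenAiKey is also hypothetical.

```php
<?php

/**
 * Hypothetical helper: read the OpenAI API key from an environment variable.
 * OPENAI_API_KEY is an assumed variable name; adjust it to your setup.
 */
function getOpenAiKey(string $varName = 'OPENAI_API_KEY'): string
{
    $key = getenv($varName);

    // getenv() returns false when the variable is not set at all
    if ($key === false || $key === '') {
        throw new RuntimeException("Environment variable $varName is not set.");
    }

    // OpenAI secret keys start with "sk-"; this catches obvious copy/paste mistakes
    if (strncmp($key, 'sk-', 3) !== 0) {
        throw new RuntimeException("$varName does not look like an OpenAI secret key.");
    }

    return $key;
}
```

PHP only allows constant expressions as static property defaults. If you use this helper in the client below, call it inside the request code rather than in the `$open_ai_key` declaration.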

Simple PHP Client For ChatGPT

Below is a very simple PHP client that you can use to connect to ChatGPT.

In case you are wondering whether the whole of ChatGPT is really just one API endpoint: yes, it is. Every chat request is a POST to https://api.openai.com/v1/chat/completions.

<?php

class ChatGPTSimpleClient {

    // get your API key here: https://platform.openai.com/account/api-keys
    private static $open_ai_key = 'your-openai-chatgpt-api-key-goes-here';

    private static $open_ai_url = 'https://api.openai.com/v1'; // current version of the API endpoint

    /**
     * Doc: https://platform.openai.com/docs/api-reference/chat/create
     * @param array $messages each item must have "role" and "content" elements; this is the whole conversation
     * @param int $maxTokens maximum tokens for the response (the prompt plus the response must fit in the model's context window)
     * @param string $model valid options are "gpt-3.5-turbo", "gpt-4", and in the future probably "gpt-5"
     * @param int $responseVariants how many responses to come up with (normally we just want one)
     * @param float $frequencyPenalty between -2.0 and 2.0; positive values penalize new tokens based on their existing frequency in the answer
     * @param float $presencePenalty between -2.0 and 2.0; positive values penalize new tokens based on whether they appear in the conversation so far, increasing the AI's chances to write on new topics
     * @param float $temperature default is 1, between 0 and 2; higher values make the model more random in its discussion (going on tangents)
     * @param string $user if you have distinct app users, you can send a user ID here, and OpenAI will look to prevent common abuses or attacks
     * @return array the decoded JSON response, or an empty array if the request failed or could not be decoded
     */
    public static function chat($messages = [], $maxTokens = 2000, $model = 'gpt-4', $responseVariants = 1, $frequencyPenalty = 0, $presencePenalty = 0, $temperature = 1, $user = '') {

        // create the message to post
        $message = new stdClass();
        $message->messages = $messages;
        $message->model = $model;
        $message->max_tokens = $maxTokens; // limits the length of the answer
        $message->n = $responseVariants;
        $message->frequency_penalty = $frequencyPenalty;
        $message->presence_penalty = $presencePenalty;
        $message->temperature = $temperature;

        if ($user) {
            $message->user = $user;
        }

        $result = self::_sendMessage('/chat/completions', json_encode($message));

        return $result;
    }

    private static function _sendMessage($endpoint, $data = '', $method = 'post') {

        $apiEndpoint = self::$open_ai_url . $endpoint;

        // options shared by GET and POST requests
        $params = array(
            CURLOPT_SSL_VERIFYHOST => 2,    // keep TLS verification on: the request carries your secret key
            CURLOPT_SSL_VERIFYPEER => true,
            CURLOPT_RETURNTRANSFER => true,
            CURLOPT_MAXREDIRS => 10,
            CURLOPT_TIMEOUT => 90,          // responses can take tens of seconds
            CURLOPT_HTTP_VERSION => CURL_HTTP_VERSION_1_1,
            CURLOPT_NOBODY => false,
            CURLOPT_HTTPHEADER => array(
                "content-type: application/json",
                "accept: application/json",
                "authorization: Bearer " . self::$open_ai_key
            )
        );

        if ($method == 'post') {
            $params[CURLOPT_URL] = $apiEndpoint;
            $params[CURLOPT_CUSTOMREQUEST] = "POST";
            $params[CURLOPT_POSTFIELDS] = $data;
        } else if ($method == 'get') {
            $params[CURLOPT_URL] = $apiEndpoint . ($data != '' ? ('?' . $data) : '');
            $params[CURLOPT_CUSTOMREQUEST] = "GET";
        }

        $curl = curl_init();
        curl_setopt_array($curl, $params);
        $response = curl_exec($curl);
        curl_close($curl);

        // curl_exec() returns false on network errors
        if ($response === false) return array();

        $data = json_decode($response, true);
        if (!is_array($data)) return array();

        return $data;
    }
}
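To make the request format explicit, here is a sketch of the JSON body that a chat request sends to https://api.openai.com/v1/chat/completions. The function name buildChatPayload is hypothetical. The field names (model, messages, max_tokens, n, frequency_penalty, presence_penalty, temperature, user) come from the API reference linked in the docblock. max_tokens is the field that actually limits the answer length. A `$maxTokens` argument has no effect unless it ends up in the body under that name.

```php
<?php

/**
 * Hypothetical helper: build the JSON request body for the chat completions endpoint.
 */
function buildChatPayload(
    array $messages,
    int $maxTokens = 2000,
    string $model = 'gpt-4',
    int $responseVariants = 1,
    float $frequencyPenalty = 0.0,
    float $presencePenalty = 0.0,
    float $temperature = 1.0,
    string $user = ''
): string {
    $payload = [
        'model' => $model,
        'messages' => $messages,
        'max_tokens' => $maxTokens,
        'n' => $responseVariants,
        'frequency_penalty' => $frequencyPenalty,
        'presence_penalty' => $presencePenalty,
        'temperature' => $temperature,
    ];

    // "user" is optional, so only send it when we have one
    if ($user !== '') {
        $payload['user'] = $user;
    }

    // JSON_THROW_ON_ERROR turns encoding problems (e.g. invalid UTF-8) into exceptions
    return json_encode($payload, JSON_THROW_ON_ERROR);
}

echo buildChatPayload([['role' => 'user', 'content' => 'Hello']], 50), "\n";
```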

Sending an Example Message to ChatGPT

Here is a simple example of the client in use:

<?php

include_once(__DIR__ . '/ChatGPTSimpleClient.php');

$response = ChatGPTSimpleClient::chat([

    // OPTIONAL - explain to ChatGPT what its role is in this conversation
    [
        "role" => "system",
        "content" => "You help companies add AI to their internal processes."
    ],

    // OPTIONAL - give the conversation a "starting point": pretend the user already asked a question
    [
        "role" => "user",
        "content" => "How can AI help my company?"
    ],

    // OPTIONAL - give what would have been a great answer from ChatGPT (this lets the LLM learn the context a bit)
    [
        "role" => "assistant",
        "content" => "AI products such as ChatGPT are able to act like a virtual assistant, and support every employee with useful tips and information."
    ],

    // REQUIRED - the latest "prompt" to ChatGPT; this is what we really want the AI to answer
    // NOTE - without the previous context (company AI processes), this question would have little meaning
    [
        "role" => "user",
        "content" => "Which common tasks can you help with?"
    ]
]);

print_r($response);

In the above, note that the conversation contains a few optional items, which are all there to give ChatGPT context. Without them, asking a question like "Which common tasks can you help with?" will most likely result in a nonsensical answer.

And let's have a look at the response from ChatGPT below (notice how it is really using the whole conversation, and not just the last question from the user):

Array
(
    [id] => chatcmpl-8Gw5NvrwW7rvrT1vop7fWbigvkoFp
    [object] => chat.completion
    [created] => 1699047221
    [model] => gpt-4-0613
    [choices] => Array
        (
            [0] => Array
                (
                    [index] => 0
                    [message] => Array
                        (
                            [role] => assistant
                            [content] => AI can help automate a wide range of tasks, such as:

1. Customer Service: AI can help with automating responses to common customer inquiries, allowing your customer service representatives to focus on more complex issues.

2. Data Analysis: AI algorithms can process and analyze massive amounts of data far more efficiently than humans can. They can identify trends and patterns that can help in decision-making.

3. Sales and Marketing: AI can help segment your customer base, personalize communication, predict future trends, and even automate parts of the sales process.

4. HR and Recruitment: AI can help sift through resumes, perform background checks, schedule interviews, and even analyze facial expressions and voice tones during interviews to assess candidate fit.

5. Operations: AI can help with inventory management, supply chain optimization, facilities management, and more.

6. Finance: AI can automate many financial tasks such as invoicing, payroll, tax preparation, etc.

7. IT management: AI can help in identifying and rectifying IT issues, managing IT infrastructure, etc.

In addition, AI can learn from its previous interactions, constantly improving its performance and accuracy over time.
                        )

                    [finish_reason] => stop
                )

        )

    [usage] => Array
        (
            [prompt_tokens] => 70
            [completion_tokens] => 228
            [total_tokens] => 298
        )

)
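In practice you usually want just the answer text, not the whole array. Below is a minimal sketch of a hypothetical helper, extractReply. It assumes that failed requests come back with an "error" object instead of "choices", which is how the OpenAI API reports errors. It also assumes that a finish_reason of "length" means the answer was cut off by the token limit.

```php
<?php

/**
 * Hypothetical helper: pull the assistant's answer out of the decoded response
 * array. Throws if the API reported an error or the array is empty.
 */
function extractReply(array $response, int $choice = 0): string
{
    // Failed requests come back with an "error" object instead of "choices"
    if (isset($response['error'])) {
        $message = $response['error']['message'] ?? 'unknown error';
        throw new RuntimeException('OpenAI API error: ' . $message);
    }

    if (!isset($response['choices'][$choice]['message']['content'])) {
        throw new RuntimeException('Unexpected response format (empty or missing choices).');
    }

    // "length" means the answer stopped because it hit the max_tokens limit
    if (($response['choices'][$choice]['finish_reason'] ?? null) === 'length') {
        error_log('Warning: the answer was truncated by the max_tokens limit.');
    }

    return $response['choices'][$choice]['message']['content'];
}
```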

How Fast Does The ChatGPT API Respond?

Surprisingly, the ChatGPT API is not fast at all. The above example takes a whopping 22 seconds to run, and all of this time is spent waiting for the response. Performance is improving with newer versions, but we wish it were improving faster.

One option is to stream the response, so that text appears while it is being generated, but this is far from ideal. If you plan on developing apps, you will need clever solutions to keep the user entertained while they wait.
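To show the answer as it is generated, you can ask the API to stream it. Adding "stream": true to the request body makes the answer arrive as server-sent events: lines of the form `data: {json}`, ending with `data: [DONE]`, where each JSON chunk carries a small piece of text in choices[0].delta.content. Below is a sketch of a parser under those assumptions. The class name StreamedTextAccumulator is hypothetical. Network chunks can split a line in half, so the class buffers incomplete lines until the next chunk arrives.

```php
<?php

/**
 * Hypothetical parser for a streamed ("stream": true) chat completions response.
 * Feed it raw network chunks; it returns the newly completed answer text.
 */
class StreamedTextAccumulator
{
    private string $buffer = '';
    public bool $done = false;

    public function feed(string $chunk): string
    {
        $this->buffer .= $chunk;
        $text = '';

        // Process every complete line; the trailing partial line stays buffered
        while (($pos = strpos($this->buffer, "\n")) !== false) {
            $line = trim(substr($this->buffer, 0, $pos));
            $this->buffer = substr($this->buffer, $pos + 1);

            if (strncmp($line, 'data:', 5) !== 0) {
                continue; // blank separator lines or other SSE fields
            }
            $data = trim(substr($line, 5));
            if ($data === '[DONE]') {
                $this->done = true;
                continue;
            }
            $event = json_decode($data, true);
            // The first chunk usually carries only the role, so content may be missing
            $text .= $event['choices'][0]['delta']['content'] ?? '';
        }

        return $text;
    }
}

// Offline demo with a line split across two chunks:
$acc = new StreamedTextAccumulator();
echo $acc->feed("data: {\"choices\":[{\"delta\":{\"content\":\"Hel\"}}]}\n\ndata: {\"choi");
echo $acc->feed("ces\":[{\"delta\":{\"content\":\"lo\"}}]}\n\ndata: [DONE]\n\n"), "\n";

// Wiring it into cURL (not executed here): send "stream": true in the body and set
// CURLOPT_WRITEFUNCTION => function ($ch, $chunk) use ($acc) { echo $acc->feed($chunk); flush(); return strlen($chunk); }
```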

How Much Did The Above ChatGPT Request Cost?

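The usage block at the bottom of the response shows that the prompt used 70 tokens, the answer used 228 tokens, and the total was 298.

At the $0.002 per 1,000 tokens quoted at the start, the total cost for this one request is 298 / 1000 * 0.002 = $0.000596.

In other words, it would take 1,678 such requests to reach a bill of $1. This is not too bad, but note that the example above actually ran on gpt-4-0613, which is billed at a considerably higher rate, with prompt and completion tokens priced separately. Check OpenAI's pricing page for the model you use. Either way, take care not to run into an infinite loop and leave it running for a while.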
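The arithmetic above can be done directly from the usage block. Below is a minimal sketch using a hypothetical function, estimateCost. Prices are in dollars per 1,000 tokens and are inputs you supply, because they differ per model and change over time. Prompt and completion tokens can be priced differently, so the function takes one price for each.

```php
<?php

/**
 * Hypothetical helper: estimate the cost of one request from the "usage" block.
 * Prices are dollars per 1,000 tokens; take them from OpenAI's pricing page.
 */
function estimateCost(array $usage, float $promptPricePer1k, float $completionPricePer1k): float
{
    $promptTokens = $usage['prompt_tokens'] ?? 0;
    $completionTokens = $usage['completion_tokens'] ?? 0;

    return $promptTokens / 1000 * $promptPricePer1k
        + $completionTokens / 1000 * $completionPricePer1k;
}

// The usage block from the example response, at a flat $0.002 per 1,000 tokens
$usage = ['prompt_tokens' => 70, 'completion_tokens' => 228, 'total_tokens' => 298];
$cost = estimateCost($usage, 0.002, 0.002);
printf('Cost: $%.6f, requests to reach $1: %d' . "\n", $cost, (int) ceil(1 / $cost));
```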

What Are ChatGPT Tokens and What Are The Limits?

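We have an article devoted to describing tokens and limits, but in short, a ChatGPT token is roughly 4 characters of English text. The real definition is much more nuanced, but this is a generally accepted rule of thumb.

Each model has a fixed context window that the prompt (the whole conversation) and the response have to share: 4,096 tokens for the original gpt-3.5-turbo and 8,192 tokens for gpt-4. The max_tokens parameter caps only the response, so a long conversation leaves less room for the answer.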
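Before sending a request, you can run a ballpark check based on the 4-characters rule of thumb. The sketch below is exactly that. It is not OpenAI's real tokenizer, and the function names are hypothetical. It also ignores the few extra tokens each message's role adds. The 8,192-token window in the example is gpt-4's.

```php
<?php

/**
 * Hypothetical helper: rough token estimate using "1 token is about 4 characters".
 * Not the real tokenizer; use it only for ballpark checks.
 */
function estimateTokens(string $text): int
{
    // strlen() counts bytes, not characters; for non-English text the rule is unreliable anyway
    return (int) ceil(strlen($text) / 4);
}

/** Estimate the prompt size of a whole conversation (message contents only). */
function estimateConversationTokens(array $messages): int
{
    $total = 0;
    foreach ($messages as $message) {
        $total += estimateTokens($message['content']);
    }
    return $total;
}

$messages = [
    ['role' => 'system', 'content' => 'You help companies add AI to their internal processes.'],
    ['role' => 'user', 'content' => 'Which common tasks can you help with?'],
];
$contextWindow = 8192; // gpt-4; prompt and answer must both fit in here
$maxTokens = 2000;     // room reserved for the answer
$fits = estimateConversationTokens($messages) + $maxTokens <= $contextWindow;
echo $fits ? "Fits\n" : "Too long\n";
```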

Explaining the ChatGPT Conversation Object

This object is really a list of questions and answers, where each item has a role. The three main roles are:

- system: any context or prerequisites for the conversation go here

- assistant: this is ChatGPT, basically the AI trying to help the user

- user: the end user asking questions
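A typo in a role name only shows up as an API error after a slow round trip. A small local check catches it earlier. Below is a sketch using a hypothetical function, validateConversation. It accepts only the three roles listed above. The API also knows a few more roles for function calling, which this sketch would reject.

```php
<?php

/**
 * Hypothetical helper: validate a conversation before sending it.
 * Accepts only the system, user and assistant roles.
 */
function validateConversation(array $messages): void
{
    $allowedRoles = ['system', 'user', 'assistant'];

    if ($messages === []) {
        throw new InvalidArgumentException('The conversation must contain at least one message.');
    }

    foreach ($messages as $i => $message) {
        if (!isset($message['role']) || !in_array($message['role'], $allowedRoles, true)) {
            throw new InvalidArgumentException("Message $i has a missing or unknown role.");
        }
        if (!isset($message['content']) || !is_string($message['content'])) {
            throw new InvalidArgumentException("Message $i must have a string \"content\".");
        }
    }
}
```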

Notice in the above example, we used the "system" role to explain to ChatGPT that its current role is to help companies use AI. Some other examples might be:

- You analyze sales data at companies

- You help companies achieve marketing goals

- etc

The "content" of each conversation item is just plain text: a description, a prompt, or a response.

Here is what's important: the conversation object includes the whole conversation as a chain, which gives ChatGPT the full context for the next answer. Once the answer comes back, you as the developer can add it to the chain and then add the next user question at the end, continuing the conversation. As the conversation continues, the conversation object grows, and your follow-up questions become more expensive. Eventually, once the conversation no longer fits in the model's context window (8,192 tokens for gpt-4), the API will return an error.
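The chaining step can be sketched as a small hypothetical helper, continueConversation. It appends the answer from choices[0].message and the next user question, then returns the messages array for the follow-up request. PHP passes arrays by value, so the original array is left untouched.

```php
<?php

/**
 * Hypothetical helper: append ChatGPT's answer and the next user question
 * to the conversation, returning the messages for the follow-up request.
 */
function continueConversation(array $messages, array $response, string $nextQuestion): array
{
    // The answer lives in choices[0].message
    $answer = $response['choices'][0]['message'] ?? null;
    if ($answer === null) {
        throw new RuntimeException('The response contains no answer to append.');
    }

    $messages[] = ['role' => 'assistant', 'content' => $answer['content']];
    $messages[] = ['role' => 'user', 'content' => $nextQuestion];

    return $messages;
}

// Usage (requires the ChatGPTSimpleClient class and a valid API key):
// $response = ChatGPTSimpleClient::chat($messages);
// $messages = continueConversation($messages, $response, 'Which of these is cheapest to start with?');
// $response = ChatGPTSimpleClient::chat($messages);
```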

Most Useful Parameters To Know About

For us, the following were the most useful parameters to change so far:

- model: "gpt-3.5-turbo", "gpt-4", and in the future probably "gpt-5".

- maxTokens: the maximum number of tokens we want for ChatGPT's answer. 2000 lets the LLM be verbose, and smaller numbers force it to be more concise.

- user: if your app has users, it's a good idea to send a hashed user ID here. OpenAI can use it to monitor and detect abuse of the API (such as inappropriate prompts) by individual users of your app.
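To avoid sending personal data such as an e-mail address, you can derive a stable, non-reversible value for the user parameter. Below is a minimal sketch using PHP's built-in hash_hmac with SHA-256. The function name hashUserId and the app-specific $secret are assumptions of this sketch. Keep the secret out of source control.

```php
<?php

/**
 * Hypothetical helper: turn an internal user ID into a stable identifier
 * for the "user" parameter. $secret is an app-specific key you choose.
 */
function hashUserId(string $userId, string $secret): string
{
    // HMAC-SHA256: the same user always maps to the same 64-character hex string,
    // and without the secret it cannot be linked back to the original ID
    return hash_hmac('sha256', $userId, $secret);
}

$userParam = hashUserId('customer-42@example.com', 'replace-with-your-own-secret');
echo $userParam, "\n";
// Pass it as the last argument of the client:
// ChatGPTSimpleClient::chat($messages, 2000, 'gpt-4', 1, 0, 0, 1, $userParam);
```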

All the other parameters are fine to leave at their defaults, but you are free to change them too and see how ChatGPT changes its behavior.

Conclusion

At this point, the above tutorial should have given you enough detail to get started on a connection from PHP to ChatGPT. We tried to keep the code short and non-abstracted. If you made it this far, you will likely want to sign up for the OpenAI Developer Waitlist to create some plugins for the ecosystem. Here is also a tutorial for using vector embeddings to search texts (it's a good one).

Feel free to drop a comment or question below.